Shortest-Job-First (SJF) is a non-preemptive discipline in which waiting job (or process) with the smallest estimated run-time-to-completion, is run next. In other words, when CPU is available, it is assigned to the process that has smallest next CPU burst. The SJF scheduling is especially appropriate for batch jobs for which the run times are known in advance. Since the SJF scheduling algorithm gives the minimum average time for a given set of processes, it is probably optimal. The SJF algorithm favors short jobs (or processors) at the expense of longer ones.

Example :

Process Burst time Arrival

P1 6 0

P2 8 0

P3 7 0

P4 3 0

Gantt chart: Order P1, P2, P3, P4

| P4 | P1 | P3 | P2 |

0 3 9 16 24

Average waiting time: (0+3+16+9)/4 = 7

With FCFS: (0+6+(6+8)+(6+8+7))/4 = 10.25

Problem: SJF minimizes the average wait time because it services small processes before it services large ones. While it minimizes average wiat time, it may penalize processes with high service time requests. If the ready list is saturated, then processes with large service times tend to be left in the ready list while small processes receive service. In extreme case, where the system has little idle time, processes with large service times will never be served. This total starvation of large processes may be a serious liability of this algorithm.

Wednesday, August 26, 2009

Shortest-Job-First (SJF) Scheduling

Posted by

Sunflower

at

8/26/2009 09:12:00 PM

0

comments

![]()

Labels: Process, Scheduling algorithm, Shortest-Job-First, SJF, Waiting time

|

| Subscribe by Email |

|

CPU / Process Scheduling

CPU scheduling is the basis of multi-programmed operating systems. By switching the CPU among processes, the operating system can make the computer more productive. A multiprogramming operating system allows more than one process to be loaded into the executable memory at a time and for the loaded process to share the CPU using time-multiplexing.

Goals for Scheduling :

* Utilization/Efficiency: keep the CPU busy 100% of the time with useful work.

* Throughput: maximize the number of jobs processed per hour.

* Turnaround time: from the time of submission to the time of completion, minimize the time batch users must wait for output.

* Waiting time: Minimize the waiting time i.e. sum of times spent in ready queue.

* Response Time: time from submission till the first response is produced, minimize response time for interactive users.

* Fairness: make sure each process gets a fair share of the CPU.

Some goals can be met by incorporating a notion of priority into a “base” scheduling discipline. Each job in the ready pool has an associated priority value; the scheduler favors jobs with higher priority values.

External priority values:

• imposed on the system from outside.

• reflect external preferences for particular users or tasks.

“All jobs are equal, but some jobs are more equal than others.”

• Example: Unix nice system call to lower priority of a task.

• Example: Urgent tasks in a real-time process control system.

Internal priority: system adjusts priority values internally as as an implementation technique within the scheduler. It improves fairness, resource utilization, freedom from starvation.

• drop priority of jobs consuming more than their share

• boost jobs that already hold resources that are in demand

e.g., internal sleep primitive in Unix kernels

• boost jobs that have starved in the recent past

• typically a continuous, dynamic, readjustment in response to observed conditions and events may be visible and controllable to other parts of the system.

Scheduling policies may be preemptive or non-preemptive.

* Non-Preemptive: Non-preemptive algorithms are designed so that once a process enters the running state(is allowed a process), it is not removed from the processor until it has completed its service time ( or it explicitly yields the processor).

* Preemptive: Preemptive algorithms are driven by the notion of prioritized computation. The process with the highest priority should always be the one currently using the processor. If a process is currently using the processor and a new process with a higher priority enters, the ready list, the process on the processor should be removed and returned to the ready list until it is once again the highest-priority process in the system.

Posted by

Sunflower

at

8/26/2009 08:32:00 PM

0

comments

![]()

Labels: CPU, CPU Scheduling, Non-preemptive, Preemptive, Priority, Process

|

| Subscribe by Email |

|

Overview of Threads

A thread is an encapsulation of the flow of control in a program. Most people are used to writing single-threaded programs - that is, programs that only execute one path through their code "at a time". Multi-threaded programs may have several threads running through

different code paths "simultaneously".

A thread is similar to the sequential programs: a single thread also has a beginning, an end, a sequence, and at any given time during the run time of the thread there is a single point of execution. However, a thread itself is not a program--it cannot run on its own--but runs within a program.

Why use threads?

Threads should not alter the semantics of a program. They simply change the timing of operations. As a result, they are almost always used as an elegant solution to performance related problems. Here are some examples of situations where you might use threads :

* Doing lengthy processing: When a windows application is calculating it cannot process any more messages. As a result, the display cannot be updated.

* Doing background processing: Some tasks may not be time critical, but need to execute continuously.

* Doing I/O work: I/O to disk or to network can have unpredictable delays. Threads allow you to ensure that I/O latency does not delay unrelated parts of your application.

In the program, some operations incur a potentially large delay or CPU hogging, but this delay or CPU usage is unacceptable for other operations; they need to be serviced now.

* Making use of multiprocessor systems: You can't expect one application with only one thread to make use of two or more processors! Chapter 3 explains this in more detail.

* Efficient time sharing: Using thread and process priorities, you can ensure that everyone gets a fair allocation of CPU time.

Similarities between process and threads :

* Like processes threads share CPU and only one thread active (running) at a time.

* Like processes, threads within a processes, threads within a processes execute sequentially.

* Like processes, thread can create children.

* And like process, if one thread is blocked, another thread can run.

Differences between process and threads :

* Unlike processes, threads are not independent of one another.

* Unlike processes, all threads can access every address in the task.

* Unlike processes, thread are design to assist one other. Note that processes might or might not assist one another because processes may originate from different users.

Posted by

Sunflower

at

8/26/2009 03:32:00 PM

0

comments

![]()

Labels: Difference, Process, Similarity, Threads, why to use threads

|

| Subscribe by Email |

|

Overview Of Process

A process is an activity which takes place over time and which has a precise aim regarding the result to be achieved. The concept of a process is hierarchical which means that a process may consist of a partially ordered set of sub-processes. A process is rather abstract. It describes the essentials of the purpose, structure, rationale, roles and timing, leaving plenty of implementation freedom. The power of a process is its abstraction, which enables its application in a wide range of applications, by tailoring its implementation to the specific application. A process can be tailored and elaborated in one or more procedures, which describe cookbook-like what need to be done when and by whom.

The process has been given many definitions for instance :

* A program in Execution.

* An asynchronous activity.

* The 'animated sprit' of a procedure in execution.

* The entity to which processors are assigned.

* The 'dispatchable' unit.

In Process model, all software on the computer is organized into a number of sequential processes. A process includes PC, registers, and variables. Conceptually, each process has its own virtual CPU. In reality, the CPU switches back and forth among processes.

A process goes through a series of discrete process states :

* New State: The process being created.

* Running State: A process is said to be running if it has the CPU, that is, process actually using the CPU at that particular instant.

* Blocked (or waiting) State: A process is said to be blocked if it is waiting for some event to happen such that as an I/O completion before it can proceed. Note that a process is unable to run until some external event happens.

* Ready State: A process is said to be ready if it use a CPU if one were available. A ready state process is runable but temporarily stopped running to let another process run.

* Terminated state: The process has finished execution.

A process in an operating system is represented by a data structure known as a process control block (PCB) or process descriptor. The PCB contains important information about the specific process including :

* The current state of the process i.e., whether it is ready, running, waiting, or whatever.

* Unique identification of the process in order to track "which is which" information. * A pointer to parent process.

* Similarly, a pointer to child process (if it exists).

* The priority of process (a part of CPU scheduling information).

* Pointers to locate memory of processes.

* A register save area.

* The processor it is running on.

Posted by

Sunflower

at

8/26/2009 03:16:00 PM

0

comments

![]()

Labels: PCB, Process, Process Control Block, Stages, state

|

| Subscribe by Email |

|

Tuesday, August 25, 2009

First-Come-First-Served (FCFS) Scheduling

First-Come-First-Served algorithm is the simplest scheduling algorithm is the simplest scheduling algorithm. Processes are dispatched according to their arrival time on the ready queue. Being a non-preemptive discipline, once a process has a CPU, it runs to completion. The FCFS scheduling is fair in the formal sense or human sense of fairness but it is unfair in the sense that long jobs make short jobs wait and unimportant jobs make important jobs wait.

FCFS is more predictable than most of other schemes since it offers time. FCFS scheme is not useful in scheduling interactive users because it cannot guarantee good response time. The code for FCFS scheduling is simple to write and understand. One of the major drawback of this scheme is that the average time is often quite long.

CHARACTERISTICS :

o Non-preemptive.

o Ready queue is a FIFO queue.

o Jobs arriving are placed at the end of queue.

o Dispatcher selects first job in queue and this job runs to completion of CPU burst.

Advantages:

- Simple.

- Low overhead.

Disadvantages:

- Inappropriate for interactive systems.

- Large fluctuations in average turnaround time are possible.

Example :

Process Burst Time

P1 24

P2 3

P3 3

Suppose that the processes arrive in the order: P1 , P2 , P3.

Waiting time for P1 = 0; P2 = 24; P3 = 27

Average waiting time: (0 + 24 + 27)/3 = 17

Suppose that the processes arrive in the order P2 , P3 , P1.

Waiting time for P1 = 6; P2 = 0; P3 = 3

Average waiting time: (6 + 0 + 3)/3 = 3

Much better than previous case.

Posted by

Sunflower

at

8/25/2009 08:01:00 PM

0

comments

![]()

Labels: Algorithm, CPU, FCFS, First-Come-First-Served, Process

|

| Subscribe by Email |

|

Overview Of Mutual Exclusions in Operating Systems

A way of making sure that if one process is using a shared modifiable data, the other processes will be excluded from doing the same thing.

Formally, while one process executes the shared variable, all other processes desiring to do so at the same time moment should be kept waiting; when that process has finished executing the shared variable, one of the processes waiting; while that process has finished executing the shared variable, one of the processes waiting to do so should be allowed to proceed. In this fashion, each process executing the shared data (variables) excludes all others from doing so simultaneously. This is called Mutual Exclusion.

Mutual exclusion (often abbreviated to mutex) algorithms are used in concurrent programming to avoid the simultaneous use of a common resource, such as a global variable, by pieces of computer code called critical sections. A critical section is a piece of code where a process or thread accesses a common resource. The critical section by itself is not a mechanism or algorithm for mutual exclusion. A program, process, or thread can have critical section in it without any mechanism or algorithm, which implements mutual exclusion.

Examples of such resources are fine-grained flags, counters or queues, used to communicate between code that runs concurrently, such as an application and its interrupt handlers. The problem is acute because a thread can be stopped or started at any time.

How Mutual Exclusion is Done ?

We need to stop the two threads from working on the same data at the same time. The most common way to do this today is by using locks. A lock can be either locked or unlocked. As long as you do not forget to lock or unlock the door, this algorithms guarantees mutual exclusion and protects the so called critical region.

C:

1. omp_set_lock (&my_lock);

2. i++;

3. omp_unset_lock (&my_lock);

Actually, the lock needs to be initialized beforehand and destroyed sometime afterwards as well, but that's not too difficult either.

C:

1. #pragma omp critical

2. {

3. i++;

4. }

Or even simpler, like this:

C:

1. #pragma omp atomic

2. i++;

This basically does the same thing, except you do not need to worry about initialization and destruction of the lock and you cannot forget to unlock the mutex accidentally.

Posted by

Sunflower

at

8/25/2009 07:37:00 PM

0

comments

![]()

Labels: Algorithm, Deadlocks, Lock, Mutex, Mutual Exclusions, Unlock

|

| Subscribe by Email |

|

Overview of Race Conditions in Operating Systems

A race condition happens when a system depends on something being done outside of its control before the system reaches a point where it needs to use the results of that something, and there's no way to guarantee that that something will actually be finished when the system needs it.

For example, suppose there's a person who runs a program every morning that prints letters that have been queued throughout the previous day. There's another person in another department who runs a program that queues a letter, and then offers to let the person modify it while it's sitting in the printing queue. If the person runs this program too early in the day (before the printing program gets run), they're essentially in a "race" to finish their work before the printing program runs.

Symptoms Of Race Condition :

The most common symptom of a race condition is unpredictable values of variables that are shared between multiple threads. This results from the unpredictability of the order in which the threads execute. Sometime one thread wins, and sometime the other thread wins. At other times, execution works correctly. Also, if each thread is executed separately, the variable value behaves correctly.

While many different devices are configured to allow multitasking, there is still an internal process that creates a hierarchy of functions. In order for certain functions to take place, other functions must occur beforehand. While the end user perceives that all the functions may appear to be taking place at the same time, this is not necessarily the case.

One common example of a race condition has to do with the processing of data. If a system receives commands to read existing data while writing new data, this can lead to a conflict that causes the system to shut down in some manner. The system may display some type of error message if the amount of data being processed placed an undue strain on available resources, or the system may simply shut down. When this happens, it is usually a good idea to reboot the system and begin the sequence again. If the amount of data being processed is considerable, it may be better to allow the assimilation of the new data to be completed before attempting to read any of the currently stored data.

Posted by

Sunflower

at

8/25/2009 07:15:00 PM

0

comments

![]()

Labels: Deadlocks, Devices, Operating Systems, Race Conditions, Symptoms, Threads

|

| Subscribe by Email |

|

Monday, August 17, 2009

Introduction to Distributed Database Systems

A distributed database appears to a user as a single database but is, in fact, a set of databases stored on multiple computers. The data on several computers can be simultaneously accessed and modified using a network. Each database server in the distributed database is controlled by its local DBMS, and each cooperates to maintain the consistency of the global database.

Clients, Servers, and Nodes :

A database server is the software managing a database, and a client is an application that requests information from a server. Each computer in a system is a node. A node in a distributed database system can be a client, a server, or both.

A client can connect directly or indirectly to a database server.

Site Autonomy :

Site autonomy means that each server participating in a distributed database is administered independently (for security and backup operations) from the other databases, as though each database was a non-distributed database. Although all the databases can work together, they are distinct, separate repositories of data and are administered individually. Some of the benefits of site autonomy are as follows:

- Nodes of the system can mirror the logical organization of companies or cooperating organizations that need to maintain an "arms length" relationship.

- Local data is controlled by the local database administrator. Therefore, each database administrator's domain of responsibility is smaller and more manageable.

- Independent failures are less likely to disrupt other nodes of the distributed database. The global database is partially available as long as one database and the network are available; no single database failure need halt all global operations or be a performance bottleneck.

- Failure recovery is usually performed on an individual node basis.

- A data dictionary exists for each local database.

- Nodes can upgrade software independently.

Homogenous Distributed Database Systems :

A homogenous distributed database system is a network of two or more Oracle databases that reside on one or more machines. An application can simultaneously access or modify the data in several databases in a single distributed environment. For example, a single query on local database MFG can retrieve joined data from the PRODUCTS table on the local database and the DEPT table on the remote HQ database.

Heterogeneous Distributed Database Systems :

In a heterogeneous distributed database system, at least one of the databases is a non-Oracle system. To the application, the heterogeneous distributed database system appears as a single, local, Oracle database; the local Oracle server hides the distribution and heterogeneity of the data.

The Oracle server accesses the non-Oracle system using Oracle8i Heterogeneous Services and a system-specific transparent gateway. For example, if you include a DB2 database in an Oracle distributed system, you need to obtain a DB2-specific transparent gateway so that the Oracle databases in the system can communicate with it.

Posted by

Sunflower

at

8/17/2009 04:06:00 PM

0

comments

![]()

Labels: Architecture, Data, Database Systems, Distributed

|

| Subscribe by Email |

|

GIS Data Operations and Problems in GIS

GIS applications are conducted through the use of special operators such as the following :

- Interpolation : This process derives elevation data for points at which no samples have been taken. It includes computation at single points, computation for a rectangular grid or along a contour, and so forth.

- Interpretation : Digital terrain modeling involves the interpretation of operations on terrain data such as editing, smoothing, reducing details and enhancing. Additional operations involve patching or zipping the borders of the triangle and merging, which implies combining overlapping models and resolving conflicts among attribute data.

- Proximity analysis : Several classes of proximity analysis include computations of "zones of interest" around objects.

- Raster Image processing : This process can be divided into two categories (1) map algebra which is used to integrate geographic features on different map layers to produce new maps algebraically; and (2) digital image analysis, which deals with analysis of a digital image for features such as edge detection and object detection.

- Analysis of networks : Networks occur in GIS in many contexts that must be analyzed and may be subjected to segmentations, overlays, and so on.

PROBLEMS OF GIS :

GIS is an expanding application area of databases. As a result, number of problems related to GIS applications have been generated :

- New architectures : GIS applications will need a new client-server architecture that will benefit from existing advances in RDBMS and ODBMS technology.

- Versioning and object life-cycle approach : Because of constantly evolving geographical features, GIS must maintain elaborate cartographic and terrain data - a management problem that might be eased by incremental updating coupled with update authorization schemes for different levels of users.

- Data Standards : Formalization of data transfer standards is crucial for the success of GIS.

- Matching applications and data structures.

- Lack of semantics in data structures.

Posted by

Sunflower

at

8/17/2009 03:16:00 PM

0

comments

![]()

Labels: Geographic Information System, GIS, Operators, Problems

|

| Subscribe by Email |

|

Introduction To Geographic Information Systems (GIS)

A geographic information system (GIS) integrates hardware, software, and data for capturing, managing, analyzing, and displaying all forms of geographically referenced information.

GIS software represents features on the earth, such as buildings, cities, roads, rivers, and states, on a computer. People use GIS to visualize, question, analyze, and understand this data about the world and human activity. Often, this data is viewed on a map, which provides an advantage over using spreadsheets or databases. Why? Because maps and spatial analysis can reveal patterns, point out problems, and show connections that may not be apparent in tables or text.

GIS uses layers, called "themes," to overlay different types of information, much as some static maps use mylar overlays to add tiers of information to a geographic background. Each theme represents a category of information, such as roads or forest cover. As with the old mylar maps, the layers which are underneath remain visible while additional themes are placed above.

THREE VIEWS OF A GIS :

A GIS is most often associated with a map. A map, however, is only one way you can work with geographic data in a GIS, and only one type of product generated by a GIS.

A GIS can be viewed in three ways:

- The Database View: A GIS is a unique kind of database of the world—a geographic database (geodatabase). It is an "Information System for Geography."

- The Map View: A GIS is a set of intelligent maps and other views that show features and feature relationships on the earth's surface.

- The Model View: A GIS is a set of information transformation tools that derive new geographic datasets from existing datasets.

IMPLEMENTING GIS :

Implementing GIS presents a unique set of challenges. Even the most well-funded projects can fail because of poor planning. 10-stage process for successfully deploying GIS are :

- Consider the strategic purpose.

- Plan for the planning.

- Determine technology requirements.

- Determine the end products.

- Define the system scope.

- Create a data design.

- Choose a data model.

- Determine system requirements.

- Analyze benefits and costs.

- Make an implementation plan.

APPLICATIONS OF GIS ?

Planners of all kinds—business analysts, city planners, environmental planners, and strategists from all organizations—create new patterns or reshape existing ones

every day.

1. Cartographic

- Irrigation

- Crop yield analysis

- Land evaluation

- Planning & Facilities management

- Landscape Studies

- Traffic pattern analysis

2. Digital Terrain Modeling

- Earth science resource studies

- Civil Engineering & military evaluation

- Soil surveys

- Air & Water pollution studies

- Flood control

- Water resource management

3. Geographic Objects Applications

- Car navigation systems

- Geographic market analysis

- Utility distribution & consumption

- Consumer product and services - economic analysis

Posted by

Sunflower

at

8/17/2009 02:37:00 PM

0

comments

![]()

Labels: Applications, database implementation, Geographic Information System, GIS, Maps, Views of GIS

|

| Subscribe by Email |

|

Friday, August 14, 2009

Designing of Multimedia Databases

Many inherent characteristics of multimedia data have direct and indirect impacts on the design of multimedia databases. These include : the huge size of MMDBs, temporal nature, richness of content, complexity of representation and subjective interpretation. The major challenges in designing multimedia databases arise from several requirements they need to satisfy such as the following:

- Manage different types of input, output, and storage devices.

- Handle a variety of data compression and storage formats.

- Support different computing platforms and operating systems.

- Integrate different data models.

- Offer a variety of user-friendly query systems suited to different kinds of media.

- Handle different kinds of indices.

- Develop measures of data similarity that correspond well with perceptual similarity.

- Provide transparent view of geographically distributed data.

- Adhere to real-time constraints for the transmission of media data.

- Synchronize different media types while presenting to user.

DIFFICULTIES INVOLVED WITH MULTIMEDIA DATABASES :

The difficulty of making these different types of multimedia databases readily accessible to humans is:

- The tremendous amount of bandwidth they consume.

- Creating Globally-accepted data-handling platforms, such as Joomla, and the special considerations that these new multimedia database structures require.

- Creating a Globally-accepted operating system, including applicable storage and resource management programs need to accommodate the vast Global multimedia information hunger.

- Multimedia databases need to take into accommodate various human interfaces to handle 3D-interactive objects, in an logically-perceived manner.

- Accommodating the vast resources required to utilize artificial intelligence to it's fullest potential- including computer sight and sound analysis methods.

- The historic relational databases do not conveniently support content-based searches for multimedia content.

Multimedia databases are essential for efficient management and effective use of huge amounts of data. The diversity of applications using multimedia data, the rapidly changing technology, and the inherent complexities in the semantic representation, interpretation and comparison for similarity pose many challenges. MMDBs are still in their infancy.

Posted by

Sunflower

at

8/14/2009 10:48:00 PM

0

comments

![]()

Labels: Databases, Design, Difficulties, Multimedia, Multimedia Databases

|

| Subscribe by Email |

|

Introduction to Multimedia Databases

Multimedia data typically means digital images, audio, video, animation and graphics together with text data. The acquisition, generation, storage and processing of multimedia data in computers and transmission over networks have grown tremendously in the recent past. Multimedia data are blessed with a number of exciting features. They can provide more effective dissemination of information in science, engineering , medicine, modern biology, and social sciences. It also facilitates the development of new paradigms in distance learning, and interactive personal and group entertainment. It loosely fall into three main categories:

* Static media (time-independent, i.e. images and handwriting).

* Dynamic media (time-dependent, i.e. video and sound bytes).

* Dimensional media (i.e. 3D games or computer-aided drafting programs- CAD).

TYPES OF MULTIMEDIA DATABASES :

There are numerous different types of multimedia databases :

* The Authentication Multimedia Database (also known as a Verification Multimedia Database, i.e. retina scanning), is a 1:1 data comparison.

* The Identification Multimedia Database is a data comparison of one-to-many (i.e. passwords and personal identification numbers.

* A newly-emerging type of multimedia database, is the Biometrics Multimedia Database; which specializes in automatic human verification based on the algorithms of their behavioral or physiological profile.

CONTENTS OF MULTIMEDIA DATABASES :

A multimedia database needs to manage several different types of information pertaining to the actual multimedia data. They are:

* Media data - This is the actual data representing images, audio, video that are captured, digitized, processes, compressed and stored.

* Media format data - This contains information pertaining to the format of the media data after it goes through the acquisition, processing, and encoding phases. For instance, this consists of information such as the sampling rate, resolution, frame rate, encoding scheme etc.

* Media keyword data - This contains the keyword descriptions, usually relating to the generation of the media data. For example, for a video, this might include the date, time, and place of recording , the person who recorded, the scene that is recorded, etc This is also called as content descriptive data.

* Media feature data - This contains the features derived from the media data. A feature characterizes the media contents. For example, this could contain information about the distribution of colors, the kinds of textures and the different shapes present in an image. This is also referred to as content dependent data.

Posted by

Sunflower

at

8/14/2009 09:44:00 PM

0

comments

![]()

Labels: Contents, Databases, Multimedia Databases, Types of MMDB

|

| Subscribe by Email |

|

Thursday, August 13, 2009

Data Mining as a part of Knowledge Discovery Process

Knowledge discovery in databases typically encompasses more than data mining. The knowledge discovery process comprises six phases : data selection, data cleansing, enrichment, data transformation or encoding, data mining and the reporting and display of the discovered information.

Data mining addresses inductive knowledge. Knowledge can be represented in an unstructured sense, it can be represented by rules, or propositional logic. In a structured form, it may be represented in decision trees, semantic networks, neural networks. The knowledge that is discovered during data mining can be described in 5 ways :

- Association rules : These rules correlate the presence of a set of items with another range of values for another set of variables. An association rule is of the form X=>Y where X = {x1, x2,..., xn}, and Y = {y1, y2,..., yn} are set of items, with xi and yi being distinct items for all i and j.

- Classification hierarchies : The goal is to work from an existing set of events or transactions to create a hierarchy of classes.

- Sequential patterns : A sequence of actions or events is sought. Detection of sequential patterns is equivalent to detecting association among events with certain temporal relationships.

- Patterns with time series : Similarities can be detected within the positions of the time series.

- Categorization and segmentation : A given population of events or items can be partitioned or segmented into sets of similar elements.

For most applications, the desired knowledge is a combination of the above types.

Posted by

Sunflower

at

8/13/2009 12:18:00 AM

0

comments

![]()

Labels: Data Mining, Databses, Knowledge discovery process

|

| Subscribe by Email |

|

Wednesday, August 12, 2009

Stages and Goals of Data Mining

Data Mining is an analytic process designed to explore data (usually large amounts of data - typically business or market related) in search of consistent patterns and/or systematic relationships between variables, and then to validate the findings by applying the detected patterns to new subsets of data. The process of data mining consists of three stages:

1. Initial exploration :

This stage usually starts with data preparation which may involve cleaning data, data transformations, selecting subsets of records and - in case of data sets with large numbers of variables ("fields") - performing some preliminary feature selection operations to bring the number of variables to a manageable range. Then, depending on the nature of the analytic problem, this first stage of the process of data mining may involve anywhere between a simple choice of straightforward predictors for a regression model, to elaborate exploratory analyzes using a wide variety of graphical and statistical methods.

2. Model building and validation :

This stage involves considering various models and choosing the best one based on their predictive performance. There are a variety of techniques developed to achieve that goal - many of which are based on so-called "competitive evaluation of models," that is, applying different models to the same data set and then comparing their performance to choose the best. These techniques - which are often considered the core of predictive data mining - include: Bagging (Voting, Averaging), Boosting, Stacking (Stacked Generalizations), and Meta-Learning.

3. Deployment :

The final stage involves using the model selected as best in the previous stage and applying it to new data in order to generate predictions or estimates of the expected outcome.

GOALS OF DATA MINING :

- Prediction : Data mining can show how certain attributes within the data will behave in the future. In such applications, business logic is used coupled with data mining.

- Identification : Data patterns can be used to identify the existence of an item, an event, or an activity. For example, intruders trying to break a system may be identified by the programs executed, files accessed, and CPU time per session.

- Classification : Data mining can partition the data so that different classes or categories can be identified based on combination of parameters.

- Optimization : One eventual goal of data mining may be to optimize the use of limited resources such as time, space, money, or materials and to maximize output variables such as sales or profits under a given set of constraints.

Posted by

Sunflower

at

8/12/2009 11:15:00 PM

0

comments

![]()

Labels: Classification, Data Mining, Deployment, Exploration, Goals of data mining, Identification, Model building, optimization, Prediction

|

| Subscribe by Email |

|

Tuesday, August 11, 2009

Introduction to Data Mining

Data mining is the process of discovering meaningful new correlations, patterns and trends by sifting through large amounts of data stored in repositories, using pattern recognition technologies as well as statistical and mathematical techniques.

Data mining is the analysis of (often large) observational data sets to find

unsuspected relationships and to summarize the data in novel ways that are

both understandable and useful to the data owner.

Data mining is an interdisciplinary field bringing together techniques from

machine learning, pattern recognition, statistics, databases, and visualization to

address the issue of information extraction from large data bases.

WHY MINE DATA ?

1. Commercial View :

- Lots of data is being collected and warehoused.

* Web data, e-commerce.

* Purchases at department/grocery stores.

* Bank/Credit Card transactions.

- Computers have become cheaper and more powerful

* Competitive Pressure is strong.

* Provide better, customized services for an edge.

2. Scientific View :

- Data collected and stored at enormous speeds(GB/hour).

* Remote sensors on a satellite.

* Telescopes scanning the skies.

* Micro arrays generating gene expression data.

* Scientific simulations generating terabytes of data.

- Traditional techniques infeasible for raw data.

- Data mining may help scientists :

* in classifying and segmenting data.

* in Hypothesis Formation.

SCOPE OF DATA MINING :

Data mining derives its name from the similarities between searching for valuable business information in a large database — for example, finding linked products in gigabytes of store scanner data — and mining a mountain for a vein of valuable ore. Both processes require either sifting through an immense amount of material, or intelligently probing it to find exactly where the value resides. Given databases of sufficient size and quality, data mining technology can generate new business opportunities by providing these capabilities:

- Automated prediction of trends and behaviors.

- Automated discovery of previously unknown patterns.

Automated discovery of previously unknown patterns.

* More columns : Analysts must often limit the number of variables they examine when doing hands-on analysis due to time constraints. Yet variables that are discarded because they seem unimportant may carry information about unknown patterns. High performance data mining allows users to explore the full depth of a database, without preselect a subset of variables.

* More rows : Larger samples yield lower estimation errors and variance, and allow users to make inferences about small but important segments of a population.

Posted by

Sunflower

at

8/11/2009 11:43:00 PM

0

comments

![]()

Labels: Data, Data Mining, introduction, Scope, Why data mining

|

| Subscribe by Email |

|

Monday, August 10, 2009

Functionality of Data Warehouses and Building a Data Warehouse

Data warehouses exist to facilitate complex, data-intensive, and frequent adhoc queries. The data warehouse access component supports enhanced spreadsheet functionality, efficient query processing, structured and adhoc queries, data mining, and materialized views. These offer pre-programmed functionalities such as :

- Roll-up : Data is summarized with increasing generalization.

- Drill-down : Increasing levels of details are revealed.

- Pivot : Cross tabulation is performed.

- Slice and dice : Performing projection operations on the dimensions.

- Sorting : Data is sorted by ordinal value.

- Selection : Data is available by value or range.

- Derived attributes : Attributes are computed by operations on stored and derived values.

BUILDING A DATA WAREHOUSE :

In constructing a data warehouse, builders should take a broad view of the anticipated use of the data warehouse. Acquisition of data for the warehouse involves the following steps :

- The data must be extracted from different sources.

- Data must be formatted for consistency within the data warehouse. Names, meanings, and domains of data from unrelated sources must be reconciled.

- Data must be cleaned to ensure validity.

- The data must be fitted into the data model of the data warehouse.

- The data must be loaded into the warehouse.

- How up-to-date must be data be ?

* Can the warehouse go off-line, and for how long.

* What are the data interdependencies ?

* What is the storage availability ?

* What are the distribution requirements ?

* What is the loading time ?

Data warehouses must also be designed with full consideration of the environment in which they will reside. Important design considerations include the following :

- Usage projections.

- The fit of the data model.

- Characteristics of available sources.

- Design of the metadata component.

- Modular component design.

- Design for manageability and change.

- Consideration of distributed and parallel architecture.

Posted by

Sunflower

at

8/10/2009 10:28:00 PM

0

comments

![]()

Labels: Building a data warehouse, Data Model, data warehousing, Functionality

|

| Subscribe by Email |

|

Sunday, August 9, 2009

Introduction To Data Warehousing

A data warehouse is a type of computer database that is responsible for collecting and storing the information of a particular organization. The goal of using a data warehouse is to have an efficient way of managing information and analyzing data.

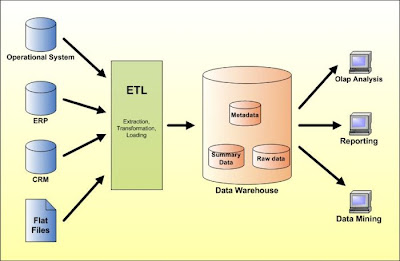

In addition to a relational database, a data warehouse environment includes an extraction, transportation, transformation, and loading (ETL) solution, an online analytical processing (OLAP) engine, client analysis tools, and other applications that manage the process of gathering data and delivering it to business users.

Despite the fact that data warehouses can be designed in a number of different ways, they all share a number of important concepts.

A data warehouse is a :

- Subject-oriented : This means that the information that is in the data warehouse is stored in a way that allows it to be connected to objects or events which occur in reality.

- Integrated : Data warehouses must put data from disparate sources into a consistent format. They must resolve such problems as naming conflicts and inconsistencies among units of measure. When they achieve this, they are said to be integrated.

- Time-variant : A time variant will allow changes in the information to be monitored and recorded over time.

- Non-volatile : This means that it cannot be deleted, and must be held to be analyzed in the future. All of the programs that are used by a particular institution will be stored in the data warehouse, and it will be integrated together.

CHARACTERISTICS OF DATA WAREHOUSES :

- Multi-dimensional conceptual view.

- Generic dimensionality.

- Unlimited dimensions and aggression levels.

- Unrestricted cross-dimensional operations.

- Dynamic sparse matrix handling.

- Client-server architecture.

- Multi-user support.

- Accessibility.

- Transparency.

- Intuitive data manipulation.

- Consistent reporting performance.

- Flexible reporting.

Posted by

Sunflower

at

8/09/2009 11:53:00 PM

0

comments

![]()

Labels: Characteristics, Concepts, data warehousing, Process

|

| Subscribe by Email |

|

Relationships And Relationship Sets

A relationship is an association between several entities.

A relationship set is a set of relationships of the same type.

A relationship type R among n entity types E1, E2,....., En defines a set of associations or a relationship set among entities from these types.

In ER diagrams, relationship types are displayed as diamond-shaped boxes, which are connected by straight lines to the rectangular boxes representing the participating entity types. The relationship name is displayed in the diamond-shaped box.

RELATIONSHIP DEGREE : The degree of a relationship type is the number of participating entity types. A relationship type of degree two is called binary, and one with degree three is called ternary.

ROLE NAMES and RECURSIVE RELATIONSHIPS :

Role names signifies the role that a participating entity from the entity type plays in each relationship instance, and helps to explain what the relationship means.

Role names are not necessary in relationship types where all the participating entity types are distinct, since each entity type name can be used as a role name. However, in some cases, the same entity type participates more than once in a relationship type in different roles. In such cases the role name becomes essential for distinguishing the meaning of each participation. Such relationships are called recursive relationships.

MAPPING CONSTRAINTS :

An E-R scheme may define certain constraints to which the contents of a database must conform.

* Mapping Cardinality : It expresses the number of entities to which another entity can be associated via a relationship. For binary relationship sets between entity sets A and B, the mapping cardinality must be one of:

1. One-to-one: An entity in A is associated with at most one entity in B, and an entity in B is associated with at most one entity in A.

2. One-to-many: An entity in A is associated with any number in B. An entity in B is associated with at most one entity in A.

3. Many-to-one: An entity in A is associated with at most one entity in B. An entity in B is associated with any number in A.

4. Many-to-many: Entities in A and B are associated with any number from each other.

The appropriate mapping cardinality for a particular relationship set depends on the real world being modeled.

* Existence Dependencies: if the existence of entity X depends on the existence of entity Y, then X is said to be existence dependent on Y. (Or we say that Y is the dominant entity and X is the subordinate entity.)

For example,

o Consider account and transaction entity sets, and a relationship log between them.

o This is one-to-many from account to transaction.

o If an account entity is deleted, its associated transaction entities must also be deleted.

o Thus account is dominant and transaction is subordinate.

Posted by

Sunflower

at

8/09/2009 10:49:00 PM

0

comments

![]()

Labels: Binary degree, Constraints, Databases, Degree, ER model, Recursive, Relationship sets, Relationships, Ternary degree

|

| Subscribe by Email |

|

Thursday, August 6, 2009

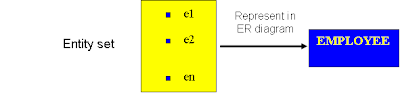

Entity - Relationship Model

The entity-relationship (ER) data model allows us to describe the data involved in a real-world enterprise in terms of objects and their relationships and is widely used to develop an initial database design. The ER model is important primarily for its role in database design. It provides useful concepts that allow us to move from an informal description of what users want from their database to a more detailed, and precise, description that can be implemented in a DBMS.

ENTITIES, ATTRIBUTES, AND ENTITY SETS

- A database can be modeled as:

o a collection of entities,

o relationship among entities.

- An entity is an object that exists and is distinguishable from other objects.

o Example: specific person, company, event, plant

- Entities have attributes.

o Example: people have names and addresses

- An entity set is a set of entities of the same type that share the same properties.

o Example: set of all persons, companies, trees, holidays.

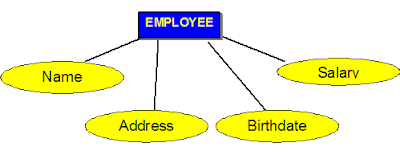

- Attributes : An entity is represented by a set of attributes, that is descriptive properties possessed by all members of an entity set.

o E.g. Employee = (Name, Address, Age, Salary)

Types of Attributes

- SIMPLE attributes are attributes that are drawn from the atomic value domains.

E.g. Name = {John} ; Age = {23}

- COMPOSITE attributes: Attributes that consist of a hierarchy of attributes.

E.g. Address may consists of “Number”, “Street” and “Suburb” → Address = {59 + ‘Meek Street’ + ‘Kingsford’}

- SINGLE VALUED attributes: Attributes that have only one value for each entity.

E.g. Name, Age for EMPLOYEE

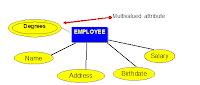

- MULTIVALUED attributes: Attributes that have a set of values for each entity.

E.g. Degrees of a person: ‘ BSc’ , ‘MIT’, ‘PhD’

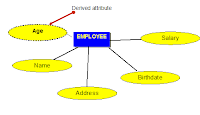

- DERIVED attributes: Attributes Contain values that are calculated from other attributes.

E.g. Age can be derived from attribute DateOfBirth. In this situation, DateOfBirth might be called Stored Attribute.

|  |  |

- DOMAIN/VALUE SETS : Each simple attribute of an entity type is associated with a value set which specifies the set of values that may be assigned to that attribute for each individual entity.

- KEYS : An important constraint on the entities is the key. An entity type usually has an attribute whose values are distinct for each individual entity in the collection. Such an attribute is called a key attribute.

For example, for the entity SET EMPLOYEE = {EID, Name, Address, Age, Salary}

EID attribute is the unique key for entity set EMPLOYEE as no two employees can have two same EID. Some entity types have more than one key attribute. An entity type may also have no key.

Posted by

Sunflower

at

8/06/2009 12:41:00 PM

0

comments

![]()

Labels: Attributes, Database design, Domain, Entity, Entity Set, Key, Types

|

| Subscribe by Email |

|

Conceptual Data Models for Database Design

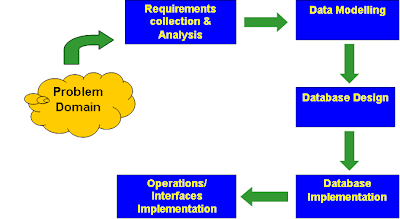

Conceptual modeling is an important phase in designing a successful database application. The database design process consists of a number of steps listed below:

Step 1: Requirements Collection and Analysis

- Prospective users are interviewed to understand and document data requirements

This step results in a concise set of user requirements, which should be detailed and complete.

- The functional requirements should be specified, as well as the data requirements. Functional requirements consist of user operations that will be applied to the database, including retrievals and updates.

- Functional requirements can be documented using diagrams such as sequence diagrams, data flow diagrams, scenarios, etc.

Step 2: Conceptual Design / Data Modeling

- Once the requirements are collected and analyzed, the designers go about creating the conceptual schema.

- Conceptual schema: concise description of data requirements of the users, and includes a detailed description of the entity types, relationships and constraints.

- The concepts do not include implementation details; therefore the end users easily understand them, and they can be used as a communication tool.

- The conceptual schema is used to ensure all user requirements are met, and they do not conflict.

Step 3: Database Design

- Two sub-steps called Database Logical Design which define a database in a data model of a specific DBMS and Database Physical Design which define the internal database storage structure are defined.

- It also defines file organization or indexing techniques.

Step 4: Database Implementation

- Many DBMS systems use an implementation data model, so the conceptual schema is transformed from the high-level data model into the implementation data model.

- This step is called logical design or data model mapping, which results in the implementation data model of the DBMS.

Step 5: Physical Design

- Internal storage structures, indexes, access paths and file organizations are specified.

- Application programs are designed and implemented.

Posted by

Sunflower

at

8/06/2009 12:09:00 PM

0

comments

![]()

Labels: Conceptual data model, conceptual design, Database design, database implementation, Databases, Design, physical design, Requirement analysis, Steps

|

| Subscribe by Email |

|

Tuesday, August 4, 2009

Database (DBMS) Languages

Once the design of the database is completed and a DBMS is chosen to implement the database, the next step is to define the conceptual and internal schema for the data and any mappings between the two. The languages that are used to do so are :

• Data Definition Language (DDL): To specify the conceptual schema of a database by the DBA and by database designers. In many DBMSs, the DDL is also used to define internal and external schemas (views).

• In some DBMSs, separate storage definition language (SDL) are used to define internal and external schemas.

The mappings between the two schemas may be specified in either one of these languages. For a true three-schema architecture, a third language is needed, the view definition language (VDL), to specify user views and their mappings to conceptual schema.

Once the database schemas are compiled and the database is populated with data, users must have some means to manipulate the data.

• Data Manipulation Language (DML): Used to specify database retrievals and updates.

• DML commands (data sublanguage) can be embedded in a general-purpose programming language (hostlanguage), such as COBOL, C or an Assembly Language.

• Alternatively, stand-alone DML commands can be applied directly (query language).

DMLs can be high level(set-oriented, nonprocedural) or low level)record-oriented, procedural). A high-level DML can be embedded in a host programming language, or it can be used as a stand-alone language; in the latter case it is often called as a query language.

In current DBMSs, a comprehensive integrated language is used that includes constructs for conceptual schema definition, view definition, and data manipulation. A typical example of a comprehensive database language is the SQL relational database language.

Whenever DML commands are embedded in a general-purpose programming language, that language is called the host language and the DML is called the data sublanguage.

Posted by

Sunflower

at

8/04/2009 09:21:00 AM

0

comments

![]()

Labels: data definition language, data manipulation language, DBMS, DBMS languages, DDL, DML

|

| Subscribe by Email |

|

DBMS Three-Schema Architecture and Data Independence

WHAT IS DBMS ?

- To be able to carry out operations like insertion, deletion and retrieval, the database needs to be managed by a substantial piece of software; this software is usually called a Database Management System(DBMS).

- A DBMS is usually a very large software package that enables many different tasks including the provision of facilities to enable the user to access and modify information in the database.

- Data Description Languages (DDL) and Data Manipulation Languages (DML) are needed for manipulating and retrieving data stored in the DBMS. These languages are called respectively.

An architecture for database systems, called the three-schema architecture was proposed to help achieve and visualize the important characteristics of the database approach.

THE THREE-SCHEMA ARCHITECTURE:

The goal of the three-schema architecture is to separate the user applications and the physical database. In this architecture, schemas can be defined at 3 levels :

1. Internal level or Internal schema : Describes the physical storage structure of the database. The internal schema uses a physical data model and describes the complete details of data storage and access paths for the database.

2. Conceptual level or Conceptual schema : Describes the structure of the whole database for a community of users. It hides the details of physical storage structures and concentrates on describing entities, data types, relationships, user operations, and constraints. Implementation data model can be used at this level.

3. External level or External schema : It includes a number of external schemas or user views. Each external schema describes the part of the database that a particular user is interested in and hides the rest of the database from user. Implementation data model can be used at this level.

IMPORTANT TO REMEMBER :

Data and meta-data

- three schemas are only meta-data(descriptions of data).

- data actually exists only at the physical level.

Mapping

- DBMS must transform a request specified on an external schema into a request against the conceptual schema, and then into the internal schema.

- requires information in meta-data on how to accomplish the mapping among various levels.

- overhead(time-consuming) leading to inefficiencies.

- few DBMSs have implemented the full three-schema architecture.

DATA INDEPENDENCE

The disjointing of data descriptions from the application programs (or user-interfaces) that uses the data is called data independence. Data independence is one of the main advantages of DBMS. The three-schema architecture provides the concept of data independence, which means that upper-levels are unaffected by changes to lower-levels. The three schemas architecture makes it easier to achieve true data independence. There are two kinds of data independence.

- Physical data independence

* The ability to modify the physical scheme without causing application programs to be rewritten.

* Modifications at this level are usually to improve performance.

- Logical data independence

* The ability to modify the conceptual scheme without causing application programs to be rewritten.

* Usually done when logical structure of database is altered.

Logical data independence is harder to achieve as the application programs are usually heavily dependent on the logical structure of the data. An analogy is made to abstract data types in programming languages.

Posted by

Sunflower

at

8/04/2009 08:37:00 AM

0

comments

![]()

Labels: Architecture, Conceptual schema, Data independence, Database Management system, Databases, DBMS, External schema, Internal, Logical, Physical, three-schema architecture

|

| Subscribe by Email |

|

Monday, August 3, 2009

Database System Concepts - Data Model, Schemas and Database state

A data model is a collection of concepts that can be used to describe the structure of a database. By structure of the database we mean the data types, relationships, and constraints that should hold on the data. Most data models also include a set of basic operations for specifying retrievals and updates on database.

Categories of Data Models:

- High level or Conceptual data models : These models provide concepts that are close to the way many users perceive data. They use concepts such as entities, attributes, and relationships. An entity represents a real-world object or concept such as an employee or a project. An attribute represents property of interest that describes an entity such as employee's salary or name. A relationship represents an interaction among the entities.

- Representational data models : These models provides concept that may be understood by end users but that are not too far removed from the way data is organized within the computer. They are used most frequently in traditional commercial DBMSs and they include the widely used relational model as well as the network and hierarchical models. These models represent data by using record structures and hence are sometimes called record-based data models.

- Low level or Physical data models : These models provide concepts that describe the details of how the data is stored in the computer by representing information such as record formats, record orderings, and access paths. An access path is a structure that makes the search for particular database records efficient.

Schemas:

The description of a database in any data model is called the database schema which is specified during the database design and is not expected to change frequently. A displayed is called a schema diagram.

A schema diagram displays only some aspects of a schema, such as names of record types and data items, and some types of constraints.

Database State or Iinstance: The actual data in a database changes every time data is inserted, deleted, or modified. The data in the database at a particular moment in time is called a database state or a snapshot. It is also called the current set of occurrences or instances in the database.

Distinguish between Database State and Database Schema:

When a new database is defined, we specify its database schema only to the DBMS. At this point, the corresponding database state is empty state. The initial state of the database is got when the database is first populated or loaded with the initial data. From then on, every time an update operation is applied to the database, we get another database state.

Posted by

Sunflower

at

8/03/2009 11:03:00 AM

0

comments

![]()

Labels: Categories, Conceptual data model, Data Model, Database state, Databases, Instance, physical data model, representational data model, Schemas

|

| Subscribe by Email |

|

Introduction to Databases

Databases play an important role in almost all areas where they are used including business, engineering, medicine, law, education, and library science, to name a few.

A database is a collection of related data, where data means recorded facts. A typical database represents some aspect of the real world and is used for specific purposes by one or more groups of users. Databases are not specific to computers. Examples of non-computerized databases abound: phone book, dictionaries, almanacs, etc. A database has the following implicit properties :

1. A database represents some aspect of the real world.

2. A database is a logically coherent collection of data with some inherent meaning.

3. A databse is designed, built, and populated with data for a specific purpose.

4. A databse can be of any size and of varying complexity.

5. A database may be generated and maintained manually or it may be computerized.

A database management system (DBMS) is a collection of programs that enables users to create and maintain a database. The DBMS is a general-purpose software system that facilitates the process of defining, construction, and manipulating databases for different applications.

Defining a database involves specifying the data types, structures, and constraints for the data to be stored in the database.

Constructing a database is the process of storing the data itself on some storage medium that is controlled by the DBMS.

Manipulating a database includes such functions as querying the database to retrieve specific data, updating the database and generating the reports from the data.

CHARACTERSTICS THAT DISTINGUISH DATABASE APPROACH FROM TRADITIONAL FILE-PROCESSING APPLICATIONS :

- Existence of a catalog : It contains information such as structure of each file, the type and storage format of each data item and various constraints on the data. The information stored in catalog is called meta-data.

- Program data independence : In traditional file processing, the structure of a file is embedded in the access programs, so any changes to the structure of a file may require changing all programs that access this file. By contrast, the structure of data files is stored in DBMS catalog separately from access programs. This property is called program data independence.

- Program operation independence: Users can define operations on data as part of database applications. An operation is specified in two parts - interface of operation : includes operation name and data types of its arguments, implementation of operation : specified separately and can be changed without affecting the interface. This is called

program operation independence.

- Data abstraction : The characteristic that allows program data independence and program operation independence is called data abstraction.

- Support of multiple user views.

- Sharing of data among multiple transactions.

Main Categories of Database users are :

- Administrators.

- Designers.

- End users.

- System analysts and application programmers.

- DBMS system designers and implementers.

- Tool Developers.

- Operators and maintenance personnel.

Advantages of using Databases :

- Potential for enforcing standards.

- Reduced application development time.

- Flexibility.

- Availability of up-to-date information to all users.

- Economies of sale.

Posted by

Sunflower

at

8/03/2009 09:32:00 AM

0

comments

![]()

Labels: Advantages, Data, Databases, File processing systems, introduction, Properties, traditional

|

| Subscribe by Email |

|

Saturday, August 1, 2009

Overview Of The Application Layer

Computer networks are inherently insecure. To keep information secret, it must be encrypted. Encryption protocols fall into two general classes: secret key (e.g. DES, IDEA), and public key (e.g. RSA). Using these protocols is straight-forward; the hard part is key management.

In addition to providing secrecy, cryptographic protocols can also provide authentication. Finally, cryptography can also be used to allow messages to be signed in such a way that the sender cannot repudiate them after they have been sent. Naming in the Internet uses a distributed database system, DNS. Domain Name Server(DNS) holds records with IP addresses, mail exchanges, and other information. By querying a DNS server, a process can map an Internet domain name onto the IP address used to communicate with that domain.

As networks grow larger, they become harder to manage. For this reason, special network management systems and protocols have been devised, the most popular of which is SNMP. This protocol allows managers to communicate with agents inside devices to read out their status and issue commands to them.

Four major network applications are electronic mail, USENET news, the World Wide Web, and multimedia. Most email systems use the mail system defined in RFCs 821 and 822. Messages sent in this system use system ASCII headers to define message properties. These messages are sent using SMTP. Two systems for securing email exist, PGP and PEM.

USENET news consists of thousands of newsgroups on all manner of topics. People can join newsgroups locally, and can then post messages all over the world using the NNTP protocol, which has some resemblance to SMTP.

The World Wide Web is a system for linking up hypertext documents. Each document is a page written in HTML, possible with hyperlinks to other documents. A browser can display a document by establishing a TCP connection to its server, asking for the document, and then closing the connection. When a hyperlink is selected by the user, that document can also be fetched in the same way. In this manner, documents all over the world are linked together in a giant web.

Multimedia is the rising star in the networking firmament. It allows audio and video to be digitized and transported electronically for display. Most multimedia projects use the MPEG standards and transmit the data over ATM connections.

Posted by

Sunflower

at

8/01/2009 10:06:00 AM

0

comments

![]()

Labels: Computer networks, Cryptography, DNS, Security, The Application layer

|

| Subscribe by Email |

|

Overview Of The Transport Layer

The transport layer is the key to understanding layered protocols. It provides various services, the most important of which is an end-to-end, reliable, connection-oriented byte stream from sender to receiver. It is accessed through service primitives that permit the establishment, use and release of connection.

Transport protocols must be able to do connection management over unreliable networks. Connection establishment is complicated by the existence of delayed duplicate packets that can reappear at inopportune moments. To deal with them, three-way handshakes are needed to establish connections. Releasing a connection is easier than establishing one, but is still far from trivial due to the two-army problem.

Even when the network layer is completely reliable, the transport layer has plenty of work to do. It must handle all the service primitives, manage connections and timers, and allocate and utilize credits.

The main Internet transport protocol is TCP. It uses a 20-byte header on all segments. Segments can be fragmented by routers within the Internet, so hosts must be prepared to do reassembly. A great deal of work has gone into optimizing TCP performance, using algorithms from Nagle, Clark, Jacobson, Karn and others.

ATM has four protocols in the AAL layer. All of them break messages into cells at the source and reassemble the cells into messages at the destination. The CS and SAR sublayers add their own headers and trailers in various ways, leaving from 44 to 48 bytes of cell payload.

Network performance is typically dominated by protocol and TPDU processing overhead, and the situation gets worse at higher speeds. Protocols should be designed to minimize the number of TPDUs, context switches, and times each TPDU is copied. For gigabit networks, simple protocols using rate, rather than credit, flow control are called for.

Posted by

Sunflower

at

8/01/2009 09:47:00 AM

0

comments

![]()

Labels: ATM, Computer networks, Protocols, Transport layer

|

| Subscribe by Email |

|

Overview Of The Medium Access Sublayer

Some networks have a single channel that is used for all communication. In these networks, the key design issue is the allocation of this channel among the competing stations wishing to use it. Numerous channel allocation algorithms have been devised like :

- FDM : Dedicate a frequency band to each station.

- TDM : Dedicate a time slot to each station.

- Pure ALOHA : Unsynchronized transmission at any instant.

- Slotted ALOHA : Random transmission in well defined time slots.

- 1-persistent CSMA : Standard carrier sense multiple access.

- Nonpersistent CSMA : Random delay when channel is sensed busy.

- P-persistent CSMA : CSMA, but with probability of p of persisting.

- CSMA/CD : CSMA. but abort on detecting a collision.

- Bit Map : Round robin scheduling using a bit map.

- Binary countdown : Highest numbered ready station goes next.

- Tree walk : Reduced contention by selective enabling.

- Wavelength division : A dynamic FDM scheme for fiber.

- MACA, MACAW : Wireless LAN protocols.

- GSM : FDM plus TDM for cellular radio.

- CDPD : Packet radio within an AMPS channel.

- CDMA : Everybody speak at once but in different language.

- Ethernet : CSMA/CD with binary exponential backoff.

- Token bus : Logical ring on a physical bus.

- Token Ring : Capture the token to send a frame.

- DQDB : Distributed queuing on a two-bus MAN.

- FDDI : Fiber-optic token ring.

- HIPPI : Crossbar using 50-100 twisted pairs.

- Fibre channel : Crossbar using fiber optics.

- SPADE : FDM with dynamic channel allocation.

- ACTS : TDM with centralized slot allocation.

- Binder : TDM with ALOHA when slot owner is not interested.

- Crowther : ALOHA with slot owner getting to keep it.

- Roberts : Channel time reserved in advance by ALOHA.

FDM and TDM are efficient when the number of stations is small and the traffic is continous.

ALOHA protocol, with and without slotting and control, has been proposed when the number of stations is large and variable.

BINARY COUNTDOWN completely eliminates contention.

TREE WALK reduces contention by dynamically dividing the stations into two disjoint groups, one of which is permitted to transmit and one of which is not.

WIRELESS LANs have their own problems and solutions. The biggest problem is caused by hidden stations, so CSMA does not work. MACA attempts to stimulate transmissions around the destination, to make CSMA work better.

GSM, CDPD and CDMA are widely used for mobile computers and telephones.

The IEEE 802 LANs are : CSMA/CD, TOKEN BUS, and TOKEN RING. Each of these has its unique advantages and disadvantages, and each has found its own user community.

FDDI, FAST ETHERNET, HIPPI, and FIBER CHANNEL offer bandwidth in the 100 Mbps range and up.

Posted by

Sunflower

at

8/01/2009 09:04:00 AM

0

comments

![]()

Labels: ALOHA, CDMA, CDPD, Channels allocation algorithms, Computer networks, CSMA, FDM, GSM, Medium Access SubLayer, TDM

|

| Subscribe by Email |

|